Introducing Calamari-OCR: A High-Performance TensorFlow-based Deep Learning Package for Optical Character Recognition

Calamari is a new, free, and open-source optical character recognition (OCR) package based on TensorFlow that uses state-of-the-art deep neural networks (DNNs). It focuses mainly on contemporary and historical prints. It is based on OCRopy and Kraken and is designed to be easy to use from the command line, while being modular enough to be integrated into and customized for other applications. Calamari is designed only to recognize text, which means all other image processing tasks must be handled by another engine (recommended: OCRopus). It is meant for training and applying OCR models on text lines and includes several recent techniques to reduce computation time and improve the performance of the models.

Because it is based on TensorFlow, it supports deep network architectures that combine convolutional (CNN) and long short-term memory (LSTM) layers, which have proven to give the best results in OCR. Using a Graphics Processing Unit (GPU) is optional; on supported devices, Calamari can run on a GPU via CUDA and cuDNN, which reduces the computation time for training and prediction. It also employs advanced techniques such as voting and pretraining, which reduce the character error rate (CER).
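The character error rate is commonly defined as the edit (Levenshtein) distance between the predicted text and the ground truth, divided by the number of ground-truth characters. A minimal Python sketch of that usual definition follows; the helper names are our own and are not part of Calamari.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of insertions, deletions and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to every prefix of b
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def character_error_rate(prediction: str, ground_truth: str) -> float:
    """CER = edit distance / number of ground-truth characters."""
    return levenshtein(prediction, ground_truth) / max(1, len(ground_truth))


print(character_error_rate("JAYTFCH", "JAYTECH"))  # one substitution in 7 characters: 1/7 ≈ 0.143
```

A lower CER is better; a CER of 0 means the line was transcribed perfectly.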

Calamari is not designed to support the full OCR pipeline: it only recognizes text in already processed text-line images. Tasks such as segmentation and image processing must be done independently. However, it can be integrated into existing pipelines, which makes it easy to use.

Methods Used

As mentioned before, Calamari uses different DNN structures, confidence voting of different predictions, pretraining of models, and fine-tuning with codec adaption, which help it achieve state-of-the-art results on both historical and contemporary prints. The methods used in Calamari are briefly explained below:

Network Architecture Building Blocks

The goal of the DNN and its decoder is to transcribe a segmented text-line image into a sequence of characters. This sequence-to-sequence task is trained with the Connectionist Temporal Classification (CTC) algorithm. Given a line image of height $h$ and width $w$ and an alphabet $L$ (which includes the additional blank label introduced by CTC), the network outputs a matrix

$$P(x, l) \in \mathbb{R}^{w \times |L|},$$

where, for every position $x$, $P(x, \cdot)$ is a probability distribution over the labels $l \in L$, i.e. $\sum_{l \in L} P(x, l) = 1$.

The blank label is predicted at positions where the network should not emit a character, for example when it does not know what to predict. A greedy decoder picks the label with the highest probability at each position $x$. The final decoding result is obtained by merging neighbouring predictions of the same character and then removing the blank labels. For instance, the prediction JA__YT__ECH (where _ denotes the blank) is decoded as JAYTECH.

The network is trained using the CTC loss function.

Fine-tuning and Codec Resizing

To improve the accuracy of a model on a particular dataset, the model is usually not trained from scratch; instead, an existing robust model is fine-tuned on that dataset. Calamari applies this approach: it starts from a pretrained model and fine-tunes it, which yields higher accuracy and reduces the training time. This is because the initial weights are not chosen randomly but taken from the pretrained base model, so they are already meaningful and only small adjustments are needed. The alphabets (codecs) of the base model and the new dataset usually differ in only a few characters, so Calamari resizes the codec: the output weights of characters shared by both codecs are kept, characters that are no longer needed are removed, and new characters are added to the output layer.
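Codec resizing can be illustrated on a plain dense output layer. The sketch below is a hypothetical NumPy helper, not Calamari's implementation: it assumes the output layer is a weight matrix with one column per codec entry, keeps the pretrained columns of shared characters, drops removed ones, and gives new characters a small random initialisation (the scale 0.01 is an arbitrary assumption).

```python
import numpy as np


def resize_codec(weights, bias, old_codec, new_codec, seed=0):
    """Adapt a dense output layer to a new alphabet (codec).

    weights: (n_features, len(old_codec)); bias: (len(old_codec),).
    Columns of characters present in both codecs are copied over,
    characters missing from new_codec are dropped, and new characters
    get a small random initialisation (an assumption for this sketch).
    """
    rng = np.random.default_rng(seed)
    n_features = weights.shape[0]
    new_weights = rng.normal(0.0, 0.01, size=(n_features, len(new_codec)))
    new_bias = np.zeros(len(new_codec))
    old_index = {char: i for i, char in enumerate(old_codec)}
    for j, char in enumerate(new_codec):
        if char in old_index:  # shared character: keep the pretrained weights
            new_weights[:, j] = weights[:, old_index[char]]
            new_bias[j] = bias[old_index[char]]
    return new_weights, new_bias


# Hypothetical example: "" stands for the CTC blank; "b" is dropped, "d" is new.
old_codec = ["", "a", "b", "c"]
new_codec = ["", "a", "c", "d"]
W = np.arange(8, dtype=float).reshape(2, 4)
b = np.array([0.1, 0.2, 0.3, 0.4])
W_new, b_new = resize_codec(W, b, old_codec, new_codec)
```

Only the column for "d" starts from scratch, which is why fine-tuning from a base model needs far fewer updates than training a new one.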

Voting

Another way to obtain more robust results is voting, i.e. combining the predictions of several different models. The result depends strongly on the variance of the voters, that is, on how different their predictions are. Confidence voting has produced the best results in OCR to date. The figure below shows a simple example of confidence voting, in which three different models each predict a character together with its confidence. Because confidence voting adds up the confidences of all voters for each character instead of simply counting votes, a letter chosen by a single voter with a high enough confidence can win even against a majority vote.
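The difference between a plain majority vote and confidence voting can be shown for a single character position. This is a minimal sketch assuming the voters' outputs are already aligned character by character (a real voter must first align the predicted sequences); the function names are illustrative only.

```python
from collections import defaultdict


def majority_vote(predictions):
    """Pick the character proposed by the most voters."""
    counts = defaultdict(int)
    for char, _confidence in predictions:
        counts[char] += 1
    return max(counts, key=counts.get)


def confidence_vote(predictions):
    """Pick the character with the highest summed confidence over all voters."""
    totals = defaultdict(float)
    for char, confidence in predictions:
        totals[char] += confidence
    return max(totals, key=totals.get)


# Three voters predicting the same (already aligned) character position.
voters = [("e", 0.40), ("e", 0.35), ("c", 0.95)]
print(majority_vote(voters))    # e  (2 of 3 votes)
print(confidence_vote(voters))  # c  (0.95 > 0.40 + 0.35 = 0.75)
```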

Steps involved in Calamari-OCR System

Calamari is implemented in Python 3 and uses TensorFlow for the deep learning part, which is why it supports GPUs. It consists of three main steps:

- Preprocessing

- Training

- Prediction

Preprocessing

Calamari only preprocesses individual text-line images, which means that an already segmented line image must be supplied as input to the Calamari engine. The input image is first converted to grayscale and scaled proportionally to a standard height of 48 pixels. Then 16 white pixels of padding are added to both sides. Calamari also supports mixed left-to-right and right-to-left text, which resolves the mirroring of symbols such as brackets, and it resolves various visual ambiguities.
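The image steps above can be sketched with NumPy. This is an illustration under our own assumptions (BT.601 luma weights for the grayscale conversion, nearest-neighbour scaling, padding on the left and right, white = 255), not Calamari's actual preprocessing code; the function names are hypothetical.

```python
import numpy as np

TARGET_HEIGHT = 48  # standard line height (from the article)
PAD = 16            # white padding per side (assumed: left and right)


def to_grayscale(img: np.ndarray) -> np.ndarray:
    """Convert an RGB line image (H, W, 3) to grayscale (H, W); pass grayscale through."""
    if img.ndim == 3:
        img = img[..., :3] @ np.array([0.299, 0.587, 0.114])  # assumed luma weights
    return img.astype(np.float32)


def scale_to_height(img: np.ndarray, height: int = TARGET_HEIGHT) -> np.ndarray:
    """Resize to a fixed height, keeping the aspect ratio (nearest neighbour)."""
    h, w = img.shape
    new_w = max(1, round(w * height / h))
    rows = (np.arange(height) * h / height).astype(int)
    cols = (np.arange(new_w) * w / new_w).astype(int)
    return img[rows[:, None], cols[None, :]]


def pad_white(img: np.ndarray, pad: int = PAD, white: float = 255.0) -> np.ndarray:
    """Add `pad` white columns to the left and right of the line."""
    return np.pad(img, ((0, 0), (pad, pad)), constant_values=white)


def preprocess_line(img: np.ndarray) -> np.ndarray:
    return pad_white(scale_to_height(to_grayscale(img)))


line = np.zeros((24, 100, 3), dtype=np.uint8)  # dummy black 24x100 RGB line
print(preprocess_line(line).shape)             # (48, 232): 200 scaled columns + 2 * 16 padding
```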

Training

The default network consists of two pairs of convolution and pooling layers with ReLU activation, followed by a bidirectional LSTM layer and an output layer that predicts the characters in the line image. The first convolutional layer has 64 filters and the second has 128; both use a 3×3 kernel with 1 pixel of zero padding.
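A tf.keras sketch of the architecture as described above is shown below. It is not Calamari's own model-building code: the 2×2 max pooling and the 200 LSTM units are assumptions not stated in this article, and `build_default_model` is a hypothetical name. The output layer has one unit per character plus one for the CTC blank, matching $P(x, l)$.

```python
import numpy as np
import tensorflow as tf


def build_default_model(num_chars: int, height: int = 48, lstm_units: int = 200) -> tf.keras.Model:
    """Two conv+pool pairs with ReLU -> bidirectional LSTM -> softmax output.

    num_chars: alphabet size without the CTC blank.
    Assumptions: 2x2 max pooling and 200 LSTM units per direction.
    """
    inputs = tf.keras.Input(shape=(height, None, 1))  # grayscale line of any width
    x = inputs
    for filters in (64, 128):
        # 3x3 kernel; padding="same" adds 1 pixel of zero padding at stride 1
        x = tf.keras.layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
        x = tf.keras.layers.MaxPooling2D(pool_size=2)(x)
    # Treat the image width as the time axis: (batch, h, w, c) -> (batch, w, h * c)
    x = tf.keras.layers.Permute((2, 1, 3))(x)
    x = tf.keras.layers.Reshape((-1, (height // 4) * 128))(x)
    x = tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(lstm_units, return_sequences=True))(x)
    # One probability per character plus one for the CTC blank label
    outputs = tf.keras.layers.Dense(num_chars + 1, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)


model = build_default_model(num_chars=80)
probs = model(np.zeros((2, 48, 400, 1), dtype=np.float32))
print(tuple(probs.shape))  # (2, 100, 81): each pooling layer halves the width of 400
```

The width axis becomes the sequence axis, which is what the CTC loss and decoder operate on.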

It uses Adam (learning rate 0.001) as the standard solver and clips gradients by their global norm to tackle the exploding-gradient problem of LSTMs.
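Clipping by the global norm rescales all gradients with one common factor whenever their joint L2 norm exceeds a threshold, so the update direction is preserved while its size is bounded. A minimal NumPy sketch of the technique (the helper name and the example threshold are our own):

```python
import numpy as np


def clip_by_global_norm(grads, clip_norm):
    """Rescale a list of gradient arrays so their joint L2 norm is at most clip_norm."""
    global_norm = float(np.sqrt(sum(np.sum(np.square(g)) for g in grads)))
    if global_norm <= clip_norm:
        return grads, global_norm  # already small enough: leave unchanged
    scale = clip_norm / global_norm  # one factor for all tensors keeps the direction
    return [g * scale for g in grads], global_norm


grads = [np.array([3.0, 0.0]), np.array([[4.0]])]  # global norm = sqrt(9 + 16) = 5
clipped, norm = clip_by_global_norm(grads, clip_norm=1.0)
print(norm)  # 5.0
```

In TensorFlow the same effect is available through `tf.clip_by_global_norm`, or by passing `global_clipnorm` to the optimizer, e.g. `tf.keras.optimizers.Adam(learning_rate=0.001, global_clipnorm=5.0)`; the threshold 5.0 is an arbitrary example value, not one taken from the article.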

Prediction

Calamari uses pretrained models for prediction, and voting is used to combine the outputs of several models. Calamari can also report the confidence and position of each character in the line image, as well as the full probability distribution for the input.
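Character positions and confidences can be read off the probability matrix $P(x, l)$ during greedy CTC decoding. The sketch below is our own illustration, not Calamari's decoder: it assumes codec index 0 is the blank, reports positions as column indices of the output matrix (not pixel coordinates of the original image), and uses the highest probability within a character's run as its confidence.

```python
import numpy as np

BLANK = 0  # assumption: index 0 of the codec is the CTC blank label


def greedy_ctc_decode(probs, codec):
    """Greedy CTC decoding of a (w, |L|) probability matrix P(x, l).

    Returns the text and, per character, (char, first_x, last_x, confidence).
    """
    best = probs.argmax(axis=1)  # most likely label at every position x
    chars = []
    start = 0
    for x in range(1, len(best) + 1):
        # a run of identical labels ends when the label changes or the line ends
        if x == len(best) or best[x] != best[start]:
            label = int(best[start])
            if label != BLANK:  # merged run of a real character: keep it
                confidence = float(probs[start:x, label].max())
                chars.append((codec[label], start, x - 1, confidence))
            start = x
    return "".join(c[0] for c in chars), chars


# Reproduce the article's example: "_" is the blank, one row per position x.
codec = ["_"] + sorted(set("JAYTECH"))
labels = [codec.index(c) for c in "JA__YT__ECH"]
probs = np.full((len(labels), len(codec)), 0.01)
probs[np.arange(len(labels)), labels] = 1 - 0.01 * (len(codec) - 1)  # rows sum to 1
text, chars = greedy_ctc_decode(probs, codec)
print(text)  # JAYTECH
```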

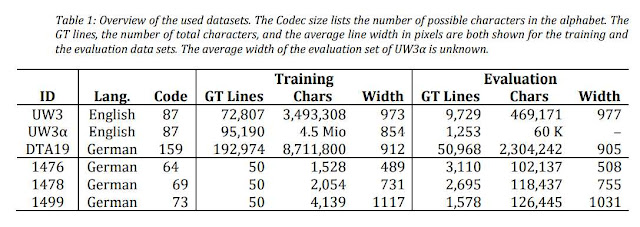

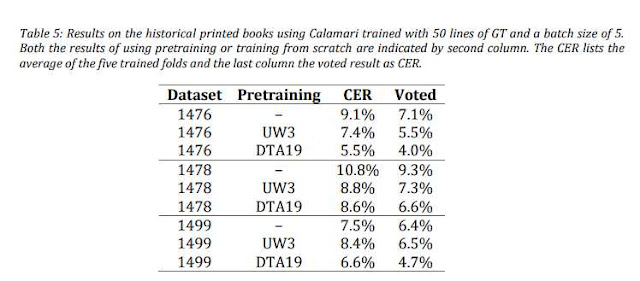

In the official Calamari paper, experiments were carried out on datasets such as UW3 and DTA19 to compare its performance with competitors such as OCRopy, OCRopus 3, and Tesseract 4. The results of these experiments are shown in the pictures above.

Note: This article is based entirely on the original paper on the Calamari engine, and all images are taken from that paper. If you want to dive deeper into Calamari-OCR, please read the original paper thoroughly.

You can also read about its installation and usage in detail in its GitHub repository, linked below.

Simple Implementation of Calamari-OCR

Now let's implement Calamari-OCR in Google Colab. First, let's install calamari_ocr in our Colab notebook:


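```
# Let's install calamari_ocr
# Note: the commands below follow the older command-line interface that goes with
# the 1.0 model archive; newer releases may use different flags
# (check `calamari-predict --help` if a flag is rejected)
!pip install calamari_ocr
```

Now download the pretrained models and unzip them:

```
# Download pretrained models
!wget https://github.com/Calamari-OCR/calamari_models/archive/1.0.zip
# Unzip the trained models
!unzip 1.0.zip
```

Then, let's download a test sample:

```
# Download a test sample
!wget https://d1ly52g9wjvbd2.cloudfront.net/img16/M/O/FF_Modern-Antiqua-RegularA.png -O test.png
```

Now let's import the libraries, load the text-line image from which the characters are to be extracted, and visualize it:

```python
# Let's visualize the image
import cv2 as cv
import matplotlib.pyplot as plt

img = cv.imread("test.png", cv.IMREAD_GRAYSCALE)  # load the line image as grayscale
assert img is not None, "test.png could not be read"
plt.imshow(img, cmap="gray")
plt.axis("off")
plt.show()
```

Finally, let's predict the output:

```
# Predict with the pretrained antiqua_modern model;
# the recognized text is written to test.pred.txt
!calamari-predict --checkpoint calamari_models-1.0/antiqua_modern/0.ckpt.json --files test.png
```

Now let's look at the predicted output:

```
# See prediction
!cat test.pred.txt
```

Here is the link to the colab file.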

That is a simple implementation of Calamari-OCR. You can dive deeper into it and integrate it into much more complex systems. Good luck!

References

- Original paper: https://arxiv.org/ftp/arxiv/papers/1807/1807.02004.pdf
- GitHub repository: https://github.com/Calamari-OCR/calamari
